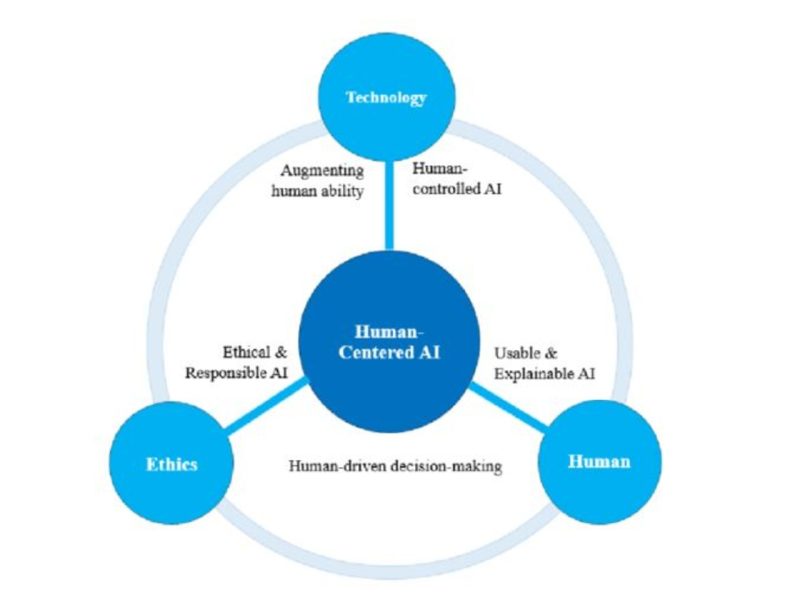

Human-centered AI (HCAI) refers to the development of artificial intelligence (AI) technologies that place human needs, values, and capabilities at the core of their design and operation. This approach helps teams create AI systems that enhance human abilities and well-being rather than replace or diminish human roles. It addresses AI’s ethical, social, and cultural implications and aims to make these systems accessible, usable, and beneficial to all segments of society. HCAI is closely linked to human-AI interaction, the field that examines how AI and humans communicate and collaborate.

In this video, Netflix Product Design Lead Niwal Sheikh talks about what HCAI is.

In Human-Centered AI, designers and developers engage in interdisciplinary collaboration and often involve psychologists, ethicists and domain experts to create transparent, explainable and accountable AI. The Human-Centered AI approach aligns with the broader movement towards ethical AI and emphasizes the importance of AI systems that respect human rights, fairness, and diversity.

Why Is Human-Centered AI Important?

Human-centered AI is crucial because it ensures that AI systems focus on human needs and values. Incorporating human-centered design into AI means actively involving users in the development process. This collaborative approach leads to more effective and ethical solutions because it draws on diverse perspectives and expertise. For example, when teams involve users from various backgrounds, those users can help identify and mitigate biases in AI algorithms, leading to more equitable outcomes.

Moreover, human-centered AI fosters trust and acceptance among users. When people understand and see the value of AI systems, they are more likely to adopt and support these technologies. This trust is essential for the successful integration of AI into everyday life.

Human-Centered AI vs. Traditional AI: What’s the Difference?

Traditional AI emphasizes task automation for efficiency, while Human-Centered AI prioritizes human needs, values, and capabilities and aims to augment those capabilities rather than replace them. This design philosophy centers on understanding and respecting human needs so that AI systems are accessible, user-friendly, and ethically aligned.

In HCAI, teams actively involve users in the design process to create solutions finely tuned to real-world needs. Ethical considerations within HCAI address privacy, fairness, and transparency, with the goal of mitigating biases and keeping AI decisions accountable and explainable. HCAI systems are context-aware and learn from and adapt to human behaviors. HCAI also draws on psychology, sociology, and design for a holistic understanding of human-AI interaction.

Several examples illustrate the differences between Human-Centered AI (HCAI) and traditional AI:

- Personalized learning systems: In education, traditional AI might focus on automating grading or delivering generic educational content. HCAI, in contrast, creates adaptive learning platforms that adjust content and teaching styles to fit each student’s learning patterns, preferences, and needs. By understanding and adapting to these behaviors and preferences, this approach can improve both the learning experience and learning outcomes.

- Healthcare applications: Traditional AI might focus on maximizing efficiency in data processing and diagnostic procedures. HCAI, on the other hand, may not only assist in diagnosis but also consider patient comfort, privacy, and emotional well-being. For example, AI tools in mental health can be designed to offer therapeutic support in a manner that is sensitive to and respectful of the patient’s psychological state.

- Automotive industry: In traditional AI, the focus might be on creating fully autonomous vehicles. HCAI takes a different route and aims to develop advanced driver-assistance systems (ADAS) that enhance driver safety and comfort, so that the technology serves the driver rather than replacing them. These systems can adapt to individual driving styles and provide intuitive assistance, supporting a harmonious interaction between humans and machines.

- Customer service: Traditional AI might deploy chatbots and automated systems that focus solely on efficiency. HCAI, however, designs these systems to recognize and respond to human emotions, providing a more empathetic and personalized customer experience. These AI systems can detect customer frustration or confusion, adapt their responses accordingly, or escalate to a human operator when necessary.

- Smart home devices: Traditional AI might focus on automation and control of home devices. HCAI, in contrast, designs smart home systems that learn from and adapt to the residents’ routines and preferences. It creates an environment that is not only efficient but also comfortable and conducive to the well-being of its inhabitants.
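The customer-service behavior described above (detecting frustration, adapting the reply, and escalating to a human) can be sketched in a few lines of Python. This is a minimal illustration under loud assumptions: the cue list, the `respond` function, and the escalation threshold are all hypothetical, and a real system would use a trained sentiment or intent model instead of keyword matching.

```python
from dataclasses import dataclass

# Hypothetical cue phrases; a real system would use a trained
# sentiment or intent classifier instead of keyword matching.
FRUSTRATION_CUES = ("frustrated", "annoyed", "useless", "not helpful", "speak to a human")


@dataclass
class Reply:
    text: str
    escalate: bool  # True when the conversation should be handed to a human agent


def frustration_score(message: str) -> int:
    """Count how many frustration cues appear in one message."""
    lowered = message.lower()
    return sum(cue in lowered for cue in FRUSTRATION_CUES)


def respond(history: list[str], threshold: int = 2) -> Reply:
    """Adapt the reply to recent frustration and escalate if it persists."""
    recent = sum(frustration_score(m) for m in history[-3:])  # last three customer turns
    if recent >= threshold:
        return Reply("I'm sorry this has been difficult. I'm connecting you with a member of our team.", True)
    if recent > 0:
        return Reply("I understand this is frustrating. Let's try a different approach.", False)
    return Reply("Happy to help! Could you tell me a bit more?", False)


print(respond(["Where is my order?"]).escalate)                              # False
print(respond(["This is useless.", "I want to speak to a human"]).escalate)  # True
```

Escalation is returned as an explicit field rather than triggered as a hidden side effect, which keeps the hand-off to a human visible and easy to test.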

What Is Ethical AI Design?

Ethical AI encompasses principles and guidelines that address potential biases and ensure transparency; it fosters accountability, promotes fairness, and safeguards privacy.

The main principles of Ethical AI are:

Transparency in AI

Transparency is a cornerstone of ethical AI; it emphasizes the importance of openness in the design, development, and deployment of AI systems. Transparent AI systems provide clear insight into their decision-making processes and allow users and stakeholders to understand how the AI draws conclusions.

Transparency is essential to build trust in AI applications, as it enables users to comprehend the rationale behind AI-generated outcomes; it helps mitigate concerns related to some AI algorithms’ “black box” nature. Transparency is particularly critical in applications with significant societal impact, such as healthcare, finance, and criminal justice.

For example, OpenAI (the creator of the generative AI program ChatGPT) publishes system cards and technical reports that describe its models’ capabilities, limitations, and safety evaluations, even though the code and weights behind ChatGPT are not public. This kind of disclosure lets developers and researchers explore, critique, and give feedback on how the models evolve, and it fosters a more collaborative and accountable AI ecosystem.

Accountability in AI

Accountability involves assigning responsibility for the actions and decisions made by AI systems. Ethical AI frameworks prioritize clear lines of accountability, which ensures that individuals or entities are answerable for the outcomes of AI applications. This accountability extends across the entire AI lifecycle, from design and training to deployment and monitoring. When stakeholders are accountable, they are incentivized to prioritize fairness, equity, and the ethical use of AI. This accountability-driven approach is essential to build a robust ethical foundation in AI.

For example, companies that use AI-driven recruitment tools must take responsibility for the impact of these tools on diversity and inclusion. Transparent reporting and regular audits can hold organizations accountable, mitigate biases and ensure fair employment practices.
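One common way to audit a recruitment tool is to compare selection rates across demographic groups. The sketch below computes each group’s rate relative to the most-selected group and flags ratios under 0.8, the rule of thumb known as the “four-fifths rule” in US employment-selection guidelines. The function names and sample data are hypothetical, and the check is a screening heuristic, not a legal or statistical test of discrimination.

```python
from collections import Counter


def selection_rates(decisions):
    """Map each group to its selection rate from (group, was_selected) pairs."""
    totals, selected = Counter(), Counter()
    for group, was_selected in decisions:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratios(decisions):
    """Each group's selection rate divided by the highest group's rate."""
    rates = selection_rates(decisions)
    best = max(rates.values())
    return {group: (rate / best if best else 1.0) for group, rate in rates.items()}


def flag_groups(decisions, threshold=0.8):
    """Groups whose ratio falls below the four-fifths screening threshold."""
    return sorted(g for g, ratio in impact_ratios(decisions).items() if ratio < threshold)


# Hypothetical audit data: 50 applicants per group.
decisions = [("A", i < 20) for i in range(50)] + [("B", i < 10) for i in range(50)]
print(selection_rates(decisions))  # {'A': 0.4, 'B': 0.2}
print(flag_groups(decisions))      # ['B']
```

Running a check like this on every model release, and publishing the results, is one concrete form the “transparent reporting and regular audits” above can take.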

Fairness in AI

Fairness in AI emphasizes the equitable treatment of individuals, irrespective of their demographic characteristics. Ethical AI frameworks prioritize the identification and mitigation of biases and ensure that AI systems do not perpetuate or exacerbate existing societal inequalities. Biases in training data or algorithmic decision-making can result in unequal treatment and reinforce societal prejudices. Ethical AI demands continuous efforts to address and rectify biases, promoting inclusivity and fairness in diverse contexts.

For example, due to historical biases in training data, facial recognition systems have exhibited racial and gender disparities. Ethical considerations demand ongoing refinement and validation to ensure that these technologies treat all individuals fairly.
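Ongoing validation of a face-verification system usually means measuring error rates separately for each demographic group rather than reporting a single overall accuracy. This hypothetical sketch computes the false non-match rate (genuine pairs wrongly rejected) per group and the ratio between the worst and best groups; the data format and function names are assumptions for illustration.

```python
from collections import defaultdict


def false_non_match_rates(trials):
    """Per-group false non-match rate from (group, is_genuine_pair, predicted_match) tuples.

    Only genuine pairs (two images of the same person) count; the rate is the
    share of those pairs the system wrongly rejected.
    """
    genuine = defaultdict(int)
    missed = defaultdict(int)
    for group, is_genuine, predicted_match in trials:
        if is_genuine:
            genuine[group] += 1
            if not predicted_match:
                missed[group] += 1
    return {group: missed[group] / genuine[group] for group in genuine}


def disparity_ratio(rates):
    """Worst group's error rate divided by the best group's (1.0 means parity)."""
    worst, best = max(rates.values()), min(rates.values())
    if worst == 0:
        return 1.0  # no errors in any group
    return float("inf") if best == 0 else worst / best


# Hypothetical evaluation: 100 genuine pairs per group plus one impostor pair.
trials = (
    [("X", True, i >= 2) for i in range(100)]
    + [("Y", True, i >= 8) for i in range(100)]
    + [("Y", False, False)]  # impostor pair: ignored by the FNMR calculation
)
rates = false_non_match_rates(trials)
print(rates)                   # {'X': 0.02, 'Y': 0.08}
print(disparity_ratio(rates))  # 4.0
```

A full evaluation would also track false match rates (impostor pairs wrongly accepted) per group, since a system can be fair on one error type and unfair on the other.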

Privacy in AI

Privacy is a fundamental ethical principle, particularly in AI applications that involve personal data. User privacy consists of safeguarding sensitive information, implementing secure data practices, and providing users with control over their data. AI systems often rely on vast amounts of data to operate effectively. Ethical AI frameworks prioritize privacy protections to prevent unauthorized access, misuse, or unintended disclosure of sensitive data.

For example, healthcare AI applications like diagnostic tools and personalized medicine involve sensitive patient data. Ethical considerations demand robust privacy measures, including encryption, secure storage, and strict access controls, to protect individuals’ medical information and maintain the confidentiality of health-related data.
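Two of the privacy measures named above, strict access controls and limiting who sees direct identifiers, can be sketched with Python’s standard library. The roles, field names, and in-memory key below are hypothetical; the sketch shows role-based field filtering and keyed pseudonymization (HMAC-SHA256). It does not implement encryption at rest, which in practice would rely on a vetted cryptography library and a key-management service.

```python
import hashlib
import hmac
import secrets

# Hypothetical key generated at start-up; in practice it would come from a
# key-management service and never be stored alongside the data.
PSEUDONYM_KEY = secrets.token_bytes(32)

# Hypothetical least-privilege policy: which record fields each role may see.
ROLE_PERMISSIONS = {
    "clinician": {"patient_id", "name", "diagnosis", "medications"},
    "researcher": {"patient_id", "diagnosis"},
}


def pseudonymize(patient_id: str) -> str:
    """Replace a direct identifier with a keyed HMAC-SHA256 digest."""
    return hmac.new(PSEUDONYM_KEY, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()


def view_record(record: dict, role: str) -> dict:
    """Return only the fields a role is allowed to see; researchers get pseudonyms."""
    allowed = ROLE_PERMISSIONS.get(role)
    if allowed is None:
        raise PermissionError(f"role {role!r} may not access patient records")
    visible = {field: value for field, value in record.items() if field in allowed}
    if role == "researcher":
        visible["patient_id"] = pseudonymize(record["patient_id"])
    return visible


record = {"patient_id": "P-1001", "name": "Jane Doe", "diagnosis": "asthma", "medications": ["albuterol"]}
print(view_record(record, "researcher"))  # name and medications removed, ID pseudonymized
```

Because the pseudonym is keyed, the same patient maps to the same token across datasets (so records can still be linked for research), but the token cannot be reversed or recomputed without the key.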

In this video, Niwal Sheikh talks about how to put ethical AI at the forefront.

What Are the Principles of Human-Centered AI Design?

The fundamentals of human-centered AI design are rooted in the following key principles:

Empathy and Understanding the User

Understanding the needs, challenges, and contexts of the users is paramount. Designers must empathize with users to create AI solutions that genuinely address their problems and enhance their lives. For example, an HCAI healthcare app should be based on in-depth interviews with patients and doctors. It should understand and anticipate the unique needs of different patients, such as medication reminders for elderly users, and ensure a personalized and empathetic user experience.

Ethical Considerations and Bias Mitigation

Ethical considerations like privacy, transparency, and fairness are crucial in human-centered AI. Designers must actively work to identify and mitigate biases in AI algorithms to ensure equitable outcomes for all users. For example, IBM Watson Health, whose assets IBM sold in 2022 and which now operate as Merative, was built to analyze patient data to assist in diagnosis and treatment planning, with stated priorities of ethical AI, data privacy, and reducing bias in its algorithms to promote fair medical treatment for all patients.

User Involvement in the Design Process

Involving users in the development process is vital for creating AI systems that are genuinely beneficial and user-friendly. This participatory approach tailors solutions to real-world needs and preferences. For example, a team creating a voice assistant should involve users from various demographics in the testing phase. Their feedback helps refine the assistant’s responses, making it more responsive and valuable to a broader user base.

Accessibility and Inclusivity

AI systems should be accessible to and usable by as wide a range of people as possible, regardless of ability or background. This inclusivity ensures that the benefits of AI are available to everyone. For example, an AI-powered educational platform with features like text-to-speech and language translation is accessible to users with disabilities and to those who speak different languages, thereby fostering inclusivity.

Transparency and Explainability

Users should be able to understand how AI systems make decisions. Transparent and explainable AI fosters trust and allows users to interact with AI systems more effectively. For example, a financial AI system might give users clear explanations of how it analyzes data to offer investment advice. This transparency helps users understand and trust the AI’s recommendations and improves the user experience.
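For simple models, one faithful way to explain a decision is to report each input’s additive contribution to the output. The sketch below assumes a hypothetical linear scoring model for a suggested stock allocation; the weights, baseline, and feature names are invented for illustration and do not reflect any real product’s logic. More complex models typically need dedicated attribution techniques instead.

```python
# Hypothetical linear model: weights, baseline, and features are invented.
WEIGHTS = {"years_to_goal": 0.02, "risk_tolerance": 0.5, "emergency_fund_months": 0.01}
BASELINE = -0.1


def suggest_stock_share(profile):
    """Return the suggested stock share and each feature's additive contribution."""
    contributions = {feature: weight * profile[feature] for feature, weight in WEIGHTS.items()}
    score = BASELINE + sum(contributions.values())
    return min(max(score, 0.0), 1.0), contributions  # clamp to a valid share


def explain(profile):
    """Render the suggestion with contributions, largest effect first."""
    share, contributions = suggest_stock_share(profile)
    lines = [f"Suggested stock allocation: {share:.0%}"]
    for feature, value in sorted(contributions.items(), key=lambda item: -abs(item[1])):
        if value == 0:
            continue
        direction = "raised" if value > 0 else "lowered"
        lines.append(f"- {feature.replace('_', ' ')} {direction} it by {abs(value) * 100:.0f} percentage points")
    return "\n".join(lines)


print(explain({"years_to_goal": 20, "risk_tolerance": 0.6, "emergency_fund_months": 6}))
```

For a linear model, the baseline plus the listed contributions add up exactly to the suggestion (unless clamping kicks in), so the explanation is the model’s actual reasoning rather than an after-the-fact approximation.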

Continuous Feedback and Improvement

Human-centered AI is an iterative process that involves continuous testing, feedback, and refinement. This approach ensures that AI systems evolve in response to changing user needs and technological advancements. For example, Tesla’s Autopilot technology aims to continuously improve through over-the-air software updates based on real-world driving data and user feedback, which enhances safety and performance over time.

Balance between Automation and Human Control

While AI can automate many tasks, it’s essential to maintain a balance where humans remain in control, especially in critical decision-making scenarios. This balance ensures that AI augments rather than replaces human capabilities. For example, in an autonomous vehicle, while the AI handles navigation, there should always be the option for the driver to take manual control. This balance ensures safety and keeps the human in command during critical situations.
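The balance between automation and human control can be expressed as a small arbitration rule: an explicit driver override always wins, low system confidence hands control back, and automation resumes only when the driver confirms. This is a hypothetical sketch; the class, the 0.9 confidence threshold, and the mode names are assumptions, and real driver-assistance systems add takeover alerts and grace periods that are omitted here.

```python
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    AUTOMATED = "automated"
    MANUAL = "manual"


@dataclass
class ControlArbiter:
    """Decides who is in control; the human can always take over."""
    min_confidence: float = 0.9  # hypothetical threshold
    mode: Mode = Mode.AUTOMATED

    def update(self, driver_override: bool, ai_confidence: float) -> Mode:
        if driver_override:
            # An explicit human request always wins, regardless of AI confidence.
            self.mode = Mode.MANUAL
        elif self.mode is Mode.AUTOMATED and ai_confidence < self.min_confidence:
            # The system is unsure, so it hands control back instead of guessing.
            self.mode = Mode.MANUAL
        return self.mode

    def re_engage(self, driver_confirms: bool) -> Mode:
        """Automation resumes only with explicit human confirmation."""
        if driver_confirms:
            self.mode = Mode.AUTOMATED
        return self.mode


arbiter = ControlArbiter()
print(arbiter.update(driver_override=False, ai_confidence=0.95))  # Mode.AUTOMATED
print(arbiter.update(driver_override=True, ai_confidence=0.99))   # Mode.MANUAL
```

Note that high AI confidence never pulls control away from the driver on its own; only the driver’s confirmation re-engages automation, which keeps the human in command.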

Case Studies: Successes and Challenges

In this video, Niwal Sheikh talks about the implementation of human-centered AI.

There are several notable case studies where Human-Centered AI (HCAI) has been successfully implemented in design:

- IBM’s AI for Fashion: IBM collaborated with fashion houses to develop AI systems that analyze fashion trends, customer preferences, and social media data. This HCAI approach allows designers to create more personalized and trend-responsive collections, which enhances customer satisfaction and business performance.

- Google’s AI-Powered User Experience: Google has built AI into the user experience of products like Google Assistant and Google Photos. These applications use AI capabilities such as voice recognition and automated photo tagging to understand user preferences and behaviors and to offer personalized, intuitive experiences tailored to individual users.

- Autodesk’s Generative Design: Autodesk uses AI in its generative design software, allowing designers to input design goals and parameters. The AI then generates multiple design options, optimizing for specific objectives such as material usage, weight, and cost. This approach streamlines the design process and leads to innovative solutions that might not have been considered otherwise.

- Spotify’s Personalized Recommendations: Spotify employs AI to analyze listening habits and preferences, providing highly personalized music recommendations. This user-centered approach enhances user experience by tailoring content to individual tastes, demonstrating how AI can be used to deeply understand and respond to user needs.

- Healthcare AI for Patient-Centered Care: AI is increasingly used to provide patient-centered care. For example, AI algorithms are used to analyze patient data and assist in diagnosing diseases more accurately and quickly, improving patient outcomes and experiences.
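Personalized recommendations like the Spotify example above are often built on collaborative filtering. The sketch below is a generic user-based version using cosine similarity over hypothetical play counts; it is not Spotify’s actual method, and the data and function names are illustrative assumptions.

```python
import math


def cosine(a: dict, b: dict) -> float:
    """Cosine similarity between two sparse play-count vectors."""
    dot = sum(a[track] * b[track] for track in set(a) & set(b))
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def recommend(target: str, plays: dict, k: int = 3) -> list:
    """Score tracks the target hasn't played by similar users' play counts."""
    mine = plays[target]
    scores = {}
    for other, theirs in plays.items():
        if other == target:
            continue
        similarity = cosine(mine, theirs)
        for track, count in theirs.items():
            if track not in mine:
                scores[track] = scores.get(track, 0.0) + similarity * count
    ranked = sorted(scores, key=scores.get, reverse=True)
    return [track for track in ranked if scores[track] > 0][:k]


# Hypothetical listening data: play counts per user and track.
plays = {
    "ana": {"jazz_1": 5, "jazz_2": 3},
    "ben": {"jazz_1": 4, "jazz_2": 2, "jazz_3": 6},
    "cai": {"metal_1": 7, "metal_2": 5},
}
print(recommend("ana", plays))  # ['jazz_3']
```

Tracks from users with no listening overlap score zero and are filtered out, so “ana” is only offered music from listeners whose habits resemble hers.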

Despite these successes, it’s essential to acknowledge the challenges of implementing HCAI. Facial recognition technology illustrates how biased algorithms can emerge: apps like FaceApp have faced criticism for perpetuating gender and racial biases in their image-processing algorithms. These challenges underscore the need for ongoing scrutiny, transparency, and iterative improvement in HCAI.

Human-Centered AI: What’s Next?

As Human-Centered AI evolves, several key trends and developments are likely to emerge. In this video, Niwal Sheikh talks about the future of human-centered AI.

Emergent Technologies

The future trajectory of Human-Centered AI is closely tied to advancements in emerging technologies. Natural language processing (NLP) is advancing rapidly, with applications like Grammarly using AI to understand and improve users’ writing. This supports more natural and effective communication, in line with the principles of HCAI. Another example is Replika, an AI chatbot designed to engage users in emotionally supportive conversations, which shows how emotional intelligence is being integrated into AI.

Global Adoption and Ethical AI

As HCAI gains global traction, its principles are expected to play a pivotal role in shaping the broader AI landscape. There will likely be a stronger focus on developing AI that adheres to ethical standards, prioritizes human rights, and mitigates biases. This includes designing algorithms that are fair, transparent, and accountable.

Governments and organizations increasingly recognize the importance of ethical AI, and frameworks such as the OECD AI Principles, UNESCO’s Recommendation on the Ethics of Artificial Intelligence, and the EU AI Act set out ethical principles and requirements for AI development. This global adoption aims to align AI applications with ethical standards and to foster responsible and inclusive technology.

Collaboration Between Humans and AI

The future of HCAI envisions even deeper collaboration between humans and AI. These systems will be designed to understand and predict human needs and work seamlessly alongside humans. Augmented intelligence is exemplified by applications like Runway ML, which provides a platform where users can experiment with various machine learning models, emphasizing the collaborative potential of AI in creative fields.

Interdisciplinary Collaboration

The field will witness increased collaboration between technologists, designers, psychologists, ethicists, and other stakeholders to ensure that AI systems are designed with a comprehensive understanding of human contexts and needs.

Emphasis on Explainable AI

There will be a growing demand for AI systems that can explain their decisions and actions in a way that is understandable to humans, enhancing trust and reliability.

Where to Learn More about Human-Centered AI

Watch our Master Class webinar, Human-Centered Design for AI, with Netflix Product Design Lead Niwal Sheikh.

Take our AI for Designers course to learn more about AI.

To learn more about AI’s future, read What’s Next for AI.