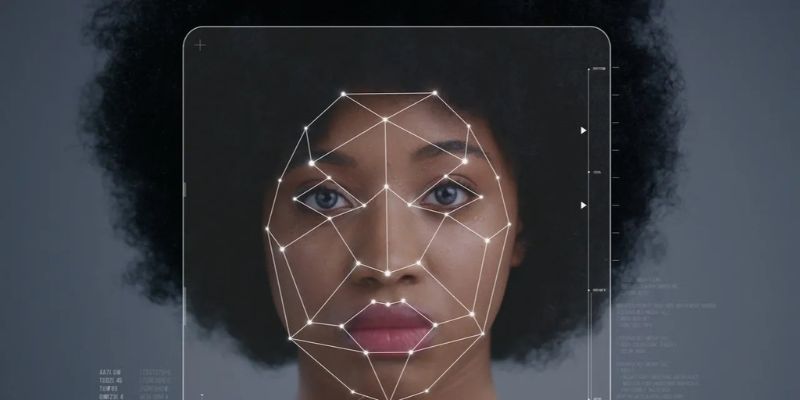

As artificial intelligence (AI) continues to advance and shape various aspects of our lives, concerns surrounding AI bias have become increasingly prominent. The National Institute of Standards and Technology (NIST) recently published a report that sheds light on the issue, emphasizing that AI bias is not solely a result of biased data. Instead, the report highlights the role of human biases and systemic, institutional biases in fueling AI bias.

Looking Beyond Data: Understanding the Broader Factors

The NIST report recommends broadening our perspective on AI bias by considering the societal factors that influence how AI technology is developed and used. This recommendation is a core message of the revised publication, “Towards a Standard for Identifying and Managing Bias in Artificial Intelligence” (NIST Special Publication 1270). The document reflects public comments NIST received on the draft version released last summer.

The revised report focuses not only on AI algorithms and the data used to train them but also on the societal context in which AI systems operate. According to Reva Schwartz, principal investigator for AI bias at NIST and one of the authors of the report, “Context is everything.” Developing trustworthy AI systems requires considering all the factors that can affect public trust in AI. These factors extend beyond the technology itself to its broader impacts on society.

The Role of Human and Systemic Biases

While it is widely known that AI systems can exhibit biases based on their programming and data sources, the NIST report emphasizes that these computational and statistical sources of bias do not represent the complete picture. It highlights the significance of human and systemic biases in shaping AI bias.

Systemic biases arise when institutions operate in ways that disadvantage certain social groups, for example through racial discrimination. Human biases, by contrast, relate to how individuals use data to fill in missing information, which can result in biased decisions. When human, systemic, and computational biases combine, they can form a harmful mix, especially when there is no explicit guidance on addressing the risks of using AI systems.

A Socio-Technical Approach to Mitigating AI Bias

To address the complex issue of AI bias, the NIST report advocates for a “socio-technical” approach. This approach recognizes that AI operates within a larger social context and that purely technical solutions are insufficient to tackle bias effectively.

Schwartz emphasizes that organizations often rely too heavily on technical solutions for AI bias issues. On their own, these measures fail to capture the societal impact of AI systems. Given the expansion of AI into many aspects of public life, it is crucial to consider AI within the larger social system in which it operates.

Socio-technical approaches to AI are an emerging area, and effectively addressing AI bias will require collaboration across multiple disciplines and stakeholders. Bringing in experts from various fields and listening to different organizations and communities are essential to understanding and mitigating the impact of AI bias.

FAQs

Q: Is AI bias solely a result of biased data?

A: No. AI bias stems from more than biased data. The NIST report emphasizes that human biases and systemic, institutional biases also contribute to it.

Q: How can we effectively address AI bias?

A: The NIST report suggests a socio-technical approach. This approach considers the larger societal context in which AI systems operate and acknowledges that purely technical solutions are insufficient to mitigate bias effectively.

Conclusion

The NIST report on AI bias highlights the need to look beyond biased data and examine the broader societal factors that contribute to AI bias. Understanding the role of human and systemic biases is a step towards developing trustworthy and responsible AI systems. Taking a socio-technical approach and engaging experts from various fields will be crucial to mitigating AI bias. As AI continues to shape our world, addressing bias is essential to ensuring fair and equitable outcomes for all.